For the past three months I’ve been trying to make entire songs using only pre-recorded samples, which has resulted in a new album, available now on Bandcamp. There are a hundred songs on this album, all the result of combining samples with software that automatically generates songs from sample pools.

Each song combines over a dozen samples, each from a different artist. Each song is a paracosm: a random imagined world emerging from a combination of creators.

Though there are many songs, they all follow a similar theme, which I would vaguely describe as glitched ambient jungle, usually with instrumentation from synths, strings, pianos and saxophones. The album can be listened to in order from track 1 to track 100 - each song changes key from one to the next according to the circle of fifths to promote a sense of progression. Or listen in any order you want.
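As a rough illustration of the key progression, here is a minimal Python sketch of walking the circle of fifths across a track list. The starting key (C), the direction (up a fifth per track) and the function name `key_for_track` are assumptions made for the example, not details taken from the album.

```python
# Pitch classes named with sharps; index 0 is C.
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def key_for_track(track_number: int, start_pitch_class: int = 0) -> str:
    """Return the key root for a 1-indexed track.

    Assumption: each track moves up a perfect fifth (7 semitones)
    from the previous one, starting from `start_pitch_class`.
    """
    steps = track_number - 1
    return NOTE_NAMES[(start_pitch_class + 7 * steps) % 12]


# The cycle returns to its starting key after 12 steps.
first_keys = [key_for_track(n) for n in range(1, 14)]
print(first_keys)
```

Because 7 and 12 share no common factor, the sequence visits all twelve roots before repeating, so a hundred tracks go around the circle a little more than eight times.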

the process

My goal for this album was to utilize a process that used only pre-recorded samples. I usually avoid this type of process, for various reasons. (Mostly I like performance-based music, akin to my musical origins of playing piano.) But my motivation for this project was to immerse myself in my own personal reluctance.

To start, I needed samples. I curated a catalog of pre-recorded samples by randomly selecting things I liked from splice.com. I spent $110 to collect all the samples I needed to make the entire album.

The process of manipulating samples into a song can be laborious - it requires taking a sample in a different key or tempo and stretching it to the target tempo, re-pitching it, trimming it to the right length, adding the right effects, splicing it in with crossfades in the right way, etc. For a hundred-song album, this would mean doing these microedits thousands of times.
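To make the stretching and re-pitching arithmetic concrete, here is a small Python sketch that works out a tempo factor and a pitch shift and assembles a sox command line from them. It relies on the sox `tempo` effect (a speed factor) and `pitch` effect (a shift in cents). The function names, file names and the choice of the nearest semitone shift are assumptions for illustration, and this is not how raw itself invokes sox.

```python
def tempo_factor(source_bpm: float, target_bpm: float) -> float:
    """Speed factor that brings a sample from source_bpm to target_bpm."""
    return target_bpm / source_bpm


def semitones_between(source_pc: int, target_pc: int) -> int:
    """Smallest signed shift in semitones between two pitch classes (0-11)."""
    diff = (target_pc - source_pc) % 12
    return diff - 12 if diff > 6 else diff


def sox_command(infile, outfile, source_bpm, target_bpm, source_pc, target_pc):
    """Build (but do not run) a sox argument list for one stretch + re-pitch."""
    factor = tempo_factor(source_bpm, target_bpm)
    cents = 100 * semitones_between(source_pc, target_pc)
    return ["sox", infile, outfile, "tempo", f"{factor:.4f}", "pitch", str(cents)]


# Hypothetical example: a 120 bpm sample in A (pitch class 9),
# fitted to a 150 bpm song in C (pitch class 0).
print(" ".join(sox_command("in.wav", "out.wav", 120, 150, 9, 0)))
```

The returned list could be passed to `subprocess.run` once the file names point at real audio. Trimming, effects and crossfades would be further steps on top of this.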

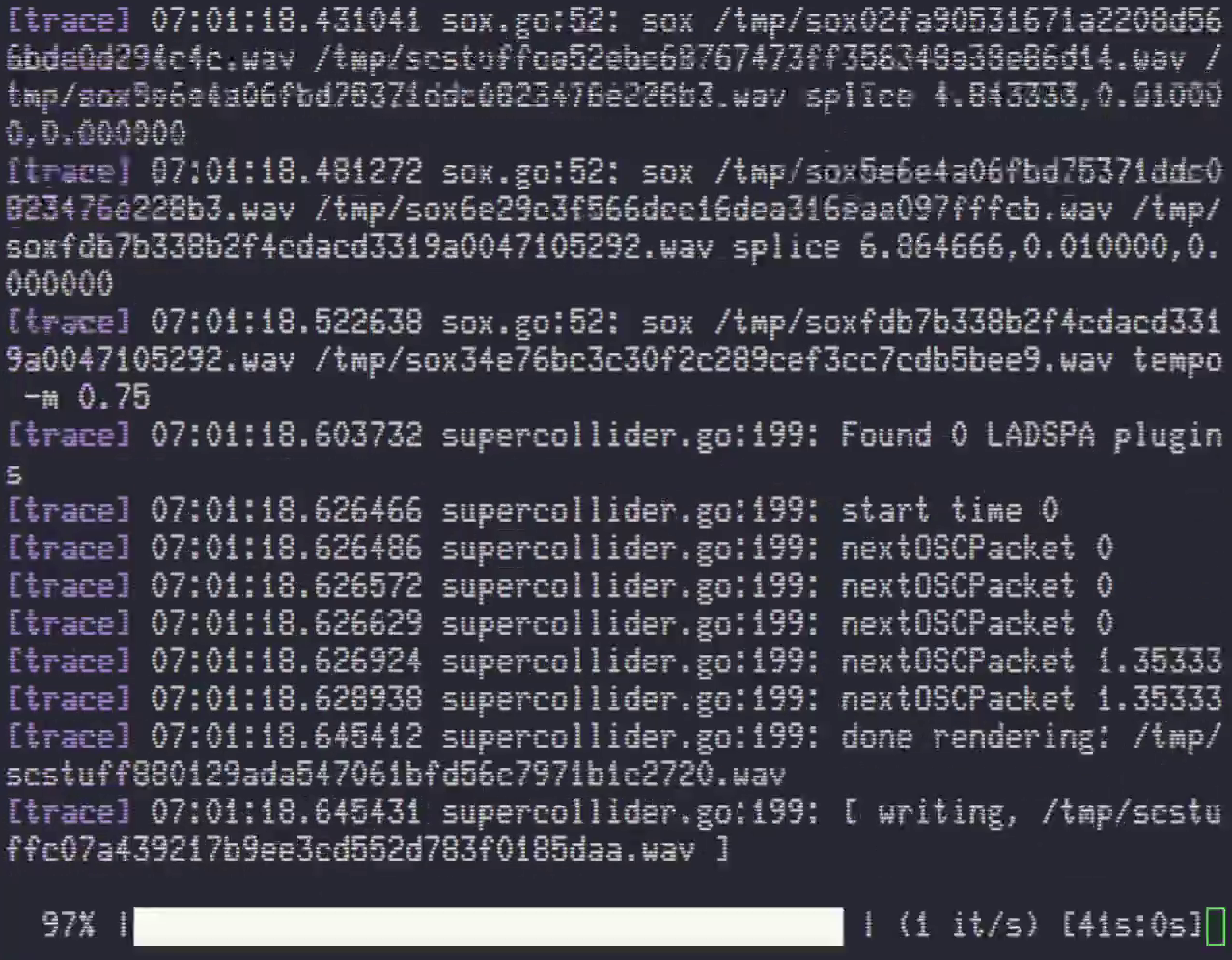

To avoid the tedium of using samples, I wrote a program I call raw (github.com/schollz/raw). The “daw” is familiar as the “digital audio workstation”, but this audio workstation makes almost all decisions stochastically, so I call it the “random audio workstation” (i.e. raw). raw randomly chooses which samples to use, how to layer the samples, how to do transitions, and which effects to add. The decisions are all based around probabilities, so the same set of samples might create an entirely different song. I have control over the probabilities but not much else.
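The idea of probability-driven decisions can be sketched in a few lines of Python. This is only an illustration of the general approach: the function `plan_section`, the effect names and the probability values are all hypothetical and are not taken from raw.

```python
import random


def plan_section(pool, rng, max_layers=3, effect_probs=None):
    """Randomly decide which samples to layer and which effects to apply.

    `pool` must be a non-empty list of sample names. Every probability
    here is a made-up placeholder.
    """
    if effect_probs is None:
        effect_probs = {"reverb": 0.3, "reverse": 0.1, "stutter": 0.2}
    # Pick how many samples to layer, then pick that many without repeats.
    n_layers = rng.randint(1, min(max_layers, len(pool)))
    layers = rng.sample(pool, n_layers)
    # Each effect is switched on independently with its own probability.
    effects = [name for name, p in effect_probs.items() if rng.random() < p]
    return {"layers": layers, "effects": effects}


pool = ["pad.wav", "sax.wav", "piano.wav", "strings.wav"]
print(plan_section(pool, random.Random(1)))
print(plan_section(pool, random.Random(2)))
```

Different seeds generally give different plans from the same pool, while adjusting the values in `effect_probs` is the kind of control over the probabilities described above.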

I didn’t write the entire program from scratch - raw is built upon freely available open-source music tools - sox (the music swiss army knife) and SuperCollider (a powerful music coding language). I used sox to perform all the splicing, stretching and effects operations, and I used SuperCollider for additional special effects like gated delays and filter ramps.

(raw is a more sophisticated version of a norns script I wrote called sampswap, which itself is a more sophisticated version of a norns script I wrote called makebreakbeat.)

more process

I realize that my process of pooling random samples and juxtaposing them randomly is a bit unconventional, so I thought I’d describe pieces of it in detail.

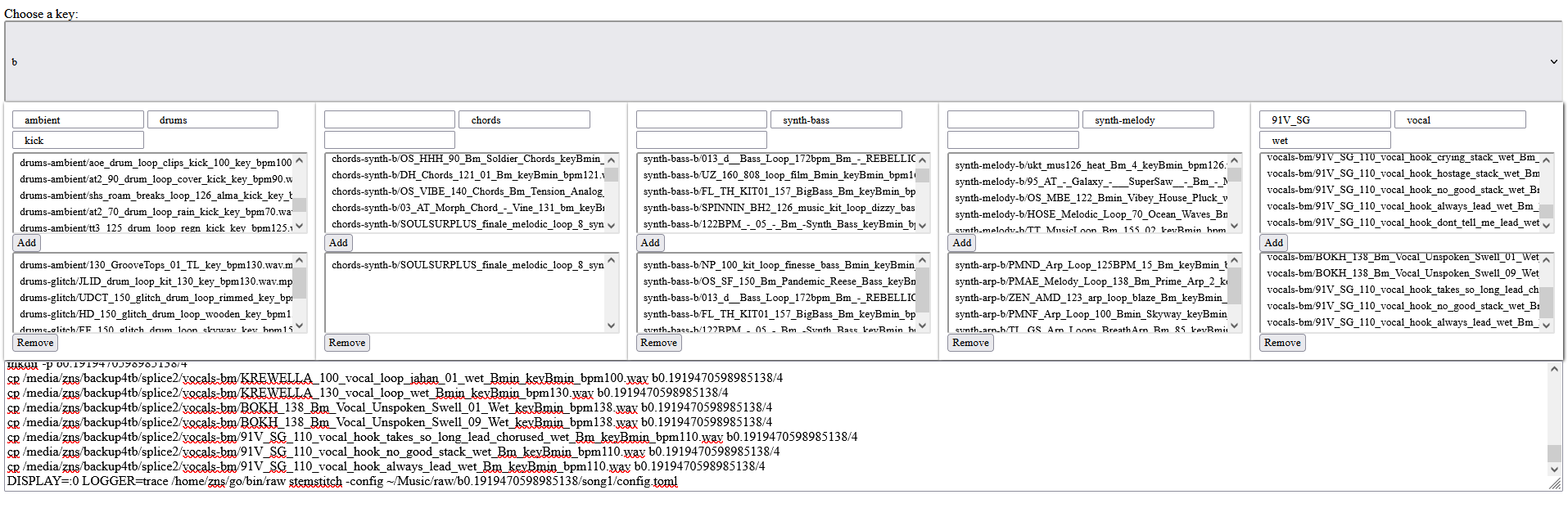

I have all of my downloaded samples in a single directory. To make matters easier, I renamed each file to include its tempo. I auditioned samples by listening to them in a web browser pointed at the directory of samples. The code for this little website is so poorly written that I don’t bother to share it.

The screenshot of the website for sample auditioning is above. It’s a very basic UI that lets me scroll through my samples in parallel and play them back to see which pool of samples might work well together. It’s really dumb simple - written in Vue, and it generates a bash script that will copy the necessary files into a new directory so that they can be utilized by raw.
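The script-generation step is simple enough to sketch. The version below is written in Python rather than Vue and is only an assumption about what such a generator might look like. The function name `copy_script`, the directory names and the file names are hypothetical.

```python
import shlex


def copy_script(selected, source_dir, dest_dir):
    """Return the text of a bash script that copies the chosen samples.

    shlex.quote protects file names that contain spaces or other
    characters the shell would otherwise interpret.
    """
    lines = ["#!/bin/bash", "set -e", f"mkdir -p {shlex.quote(dest_dir)}"]
    for name in selected:
        src = f"{source_dir}/{name}"
        lines.append(f"cp {shlex.quote(src)} {shlex.quote(dest_dir)}/")
    return "\n".join(lines) + "\n"


script = copy_script(["drums_120bpm.wav", "pad 90bpm.wav"], "samples", "pool1")
print(script)
```

Saving the returned text to a file and running it with bash would fill the new directory with the selected pool.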

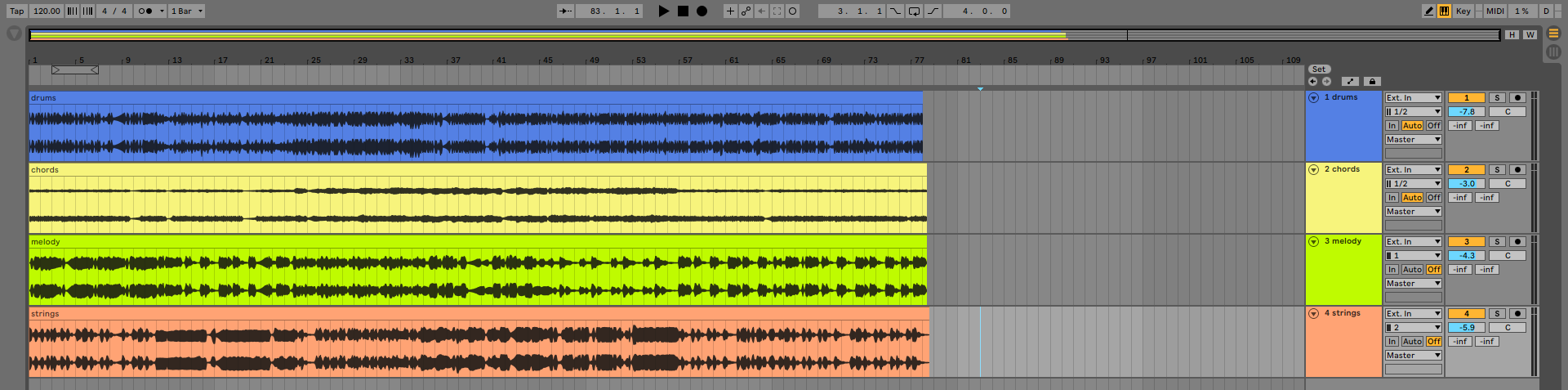

After that I simply run raw, which generates 4-5 stems: drums, bass, chords, melody and sometimes vocals. This process takes 1-2 minutes to generate everything. The end result is 4-5 music files, each a single stem for one part (drums, bass, chords, etc.), and each the length of the final song.

The last thing to do is to take these stems and mix them together in a way that makes sense. I usually prefer to make the drums and vocals louder in the mix. To do this I just open them in Ableton and edit the mixing levels.

In Ableton I don’t do much - the stems each contain the entirety of one instrument of the song. After that I listen through, fix any pops that occurred from the splicing program, and render it!

Each song took between 15 and 30 minutes to go through the entire process. I probably generated around 300 songs for this album (rejecting >60% of them), so it was easily about 150 hours of work. If I weren’t automating most of the steps, I imagine it would’ve taken much, much longer.

the art in the art

During this process I was thinking - what is the art in this process? I had no part in creating the samples - they are all pre-recorded by other musicians who sent them to splice.com. And though I wrote software to make the songs, the software itself works through randomness and makes the main choices (which samples to layer, which effects, when) without my input. So where’s the artist’s input?

Late into the process I found that I could maintain a sense of “artistic direction” by harnessing three things within my control:

- curating the pool of samples.

- choosing the tempo.

- mixing the final five stems.

They seem fairly subtle, but actually in my process they had a big impact.

The software I wrote will layer samples from the pool randomly, but the pool of samples is created by me. It took a few dozen songs to get the hang of this, but I found I would need to select a pool of samples that can intermingle in a variety of ways, otherwise it can end up sounding like multiple radio stations playing simultaneously.

Also I found that my chosen tempo made a big difference - sometimes tempos were fast and sounded like a mishmash of radio stations and sometimes they were so slow they just plodded. I spent a lot of time just trying to figure out the right tempo for each track. Usually I generated a song two or three times to get one that I liked.

Another realization I had was that this whole process is basically a lesson in mixing. My software didn’t mix the stems - it just outputs things at the levels they were prerecorded. I could get better results by working to mix them (really just manipulating five numbers, but boy those numbers make a big difference).
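To show what “five numbers” amounts to, here is a minimal Python sketch of mixing stems with one gain per stem. It is an illustration only (the actual mixing was done in Ableton): the stem names, the gain values in decibels and the tiny sample lists are made-up placeholders.

```python
def db_to_gain(db: float) -> float:
    """Convert a level in decibels to a linear amplitude multiplier."""
    return 10 ** (db / 20)


def mix(stems, gains_db):
    """Sum stems sample by sample, scaling each by its own gain.

    `stems` maps a stem name to a list of float samples; a stem with no
    entry in `gains_db` is left at 0 dB (unchanged).
    """
    length = max(len(samples) for samples in stems.values())
    out = [0.0] * length
    for name, samples in stems.items():
        gain = db_to_gain(gains_db.get(name, 0.0))
        for i, x in enumerate(samples):
            out[i] += gain * x
    return out


# Hypothetical levels: drums and vocals pushed up, chords pulled back.
gains_db = {"drums": 3.0, "bass": 0.0, "chords": -4.0, "melody": -2.0, "vocals": 2.0}
stems = {name: [0.1, -0.1, 0.05] for name in gains_db}
print(mix(stems, gains_db))
```

A change of 6 dB roughly doubles or halves the amplitude, which is why small adjustments to these numbers are so audible.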

enjoy

In the end I enjoyed the process (though only because it was automated) and I am enjoying the resulting album. This album has been my go-to listening for months and I’ve listened all the way through almost a dozen times. Maybe you will enjoy it too. Feel free to pwyw or just download it with a code:

bandcamp codes

wcse-cqst pj4b-w8cb 8fsl-c24w 2d44-xdvs h6su-bcdl h34x-xhwe hdes-j5d3 q46c-grss fc3c-34hd whxq-cmpc cqc9-wtbw crhu-cbl4 sxcx-xcva s4ps-jwtb psfs-yw3x hxpe-wpc8 jc9f-c2s2 6s2l-wdhc n4lj-hcpw les6-5c3s c6h2-bwdl cncd-xxwe cdjc-vpd3 phg4-g2ss rcu4-3lhd dswj-cjpc 6fhx-wbhw uw4s-hwms xpps-5wyd 49fc-3s2h 43d4-c4wv cxqq-wac8 e487-cbs2 gsnx-wchc 2hwp-hwpw deh8-5w3s s8c3-3sdl h4j4-jsyb jcb4-yd2x fhxh-bhsq l4cj-wjct qesr-cysb g74d-w3hw 3lsc-xsms wpq4-esyd s6r4-3d2h s3lh-clwv sdpj-wec8 74us-bx4d xcwp-wvec 4jhg-w8rw 4fcn-crx4 4dhl-xdma cheh-jdyb ec7h-yc2x bsls-bxsq dhsp-wvct pe4b-w8sb 8rsl-crhw xq49-5d2s hgsu-bdwl 4u4x-xhde 4les-jew3 qs6s-y3cs bh3c-344d wcxq-cqec cpc9-wtrw sfhu-c2x4 swcx-xcma ssps-jcyb hnls-wxdv hwpe-wps8 qh9f-crc2 942l-wd4c nslj-hdew wjs6-5c2s cgh2-bcwl c2cd-xxde sljc-j5w3 pcg4-grcs xhhq-wmst jqc7-ctcb 6rhx-wb4w nx4s-hcvs lqps-5wtd 48fc-bw3h cud4-c4dv cwqq-was8 es87-c2c2

The codes can be redeemed at https://infinitedigits.bandcamp.com/yum .

btw, the cover art

The artwork for the album utilizes Disco Diffusion, an AI that can create artworks from text. The text I chose is artwork of Salvador Dalí’s terrariums. Dalí wrote extensively about the importance of keeping spider terrariums, which were like little paracosms meant to deliver inspiration.