Text-based MIDI sequencer

I coded a new MIDI sequencer that has a high-level syntax for simultaneously sequencing any number of hardware or virtual instruments.

Lately, I’ve been making music with synthesizers. I typically perform a song on multiple synthesizers in realtime, rather than recording separate pieces and assembling them in digital audio workstation software (e.g. Ableton / GarageBand). To perform on multiple synthesizers at once, I use “sequencing”, a feature common to modern synthesizers that lets you preset when notes play.

Sequencing a synthesizer is often time-consuming (you have to press one note at a time) and unforgiving (a wrong note often means starting over). Most importantly, onboard memory constraints (typically 64-128 notes) mean that only a single part of each song can be sequenced at a time.

By writing my own program, I can avoid the limitations of onboard sequencers and, at the same time, unify all my instruments around a single sequencer. So I wrote a program in Go that sequences multiple instruments simultaneously, with any number of parts and patterns. I call this software miti, because I think of it as MIDI but with text (miti = musical instrument textual interface).

miti in action

miti is able to sequence any number of synthesizers from any type of computer (including Raspberry Pis) with low latency and low jitter.

miti also comes with a high-level language for writing sequences. Here is a simple song I scripted. It contains three parts (a melody, chords, and a background counter-arpeggio) for three instruments, spread across two patterns chained together. The sequencing language is stripped down: it focuses only on notes, rests (the periods “.”), and directives such as instrument, tempo, and pattern.

```
tempo 110
chain 1 2 2 2

pattern 1
instruments op-1
legato 20
Bb4 G4 Eb- Eb . . . . Bb4 G4 Eb- Eb . . . .
Bb4 G4 Eb- Eb . . . . Bb4 G4 Eb- Eb . . . .
G4 Eb4 C- C . . . . G4 Eb4 C- C . . . .
G4 Eb4 C- C . . . . G4 Eb4 C- C . . . .

pattern 2
instruments op-1
legato 40
Bb4 G4 Eb- Eb . . . . Bb4 G4 Eb- Eb . . . .
Bb4 G4 Eb- Eb . . . . Bb4 G4 Eb- Eb . . . .
G4 Eb4 C- C . . . . G4 Eb4 C- C . . . .
G4 Eb4 C- C . . . . G4 Eb4 C- C . . . .

instruments boutique
legato 90
Eb3GBb
D3FBb
C3EbG-
C3EbG

instruments nts-1
legato 50
Eb1 G Bb Eb G Bb Eb G Bb Eb G Bb Eb G Bb Eb G Bb
D1 F Bb D F Bb D F Bb D F Bb D F Bb D F Bb
C1 Eb G C Eb G C Eb G C Eb G C Eb G C Eb G
C1 Eb G C Eb G C Eb G C Eb G C Eb G C Eb G
```

And here is how it sounds:

If you are interested in using miti, check out the documentation. The rest of this article covers the design of miti, the problems inherent in realtime applications, and how I used Go to tackle them.

Developing a MIDI sequencer

Developing a MIDI sequencer is somewhat daunting because of the realtime nature of music. Most listeners can detect a note that is off by a mere 40 milliseconds (in my experience, adept musicians can sometimes detect even 20 milliseconds). A program that plays notes tens of milliseconds early or late is therefore not viable.

MIDI messages are sent over USB, and a sequencer can need transfer rates of 200 messages per second (when controlling three synthesizers playing 1/8th notes at 240 bpm). A single burst might involve 10 messages within a few milliseconds. The program therefore needs millisecond-level latency internally to keep timing accurate.
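For a sense of scale at the fastest tempo mentioned above (240 bpm), here is how long an eighth-note step and a single MIDI clock pulse last. The pulse figure assumes the standard rate of 24 pulses per quarter note:

$$
\Delta t_{1/8} = \frac{60\ \mathrm{s}}{240 \times 2} = 125\ \mathrm{ms},
\qquad
\Delta t_{\mathrm{pulse}} = \frac{60\ \mathrm{s}}{240 \times 24} \approx 10.4\ \mathrm{ms}
$$

A 40 ms error therefore shifts a note by about a third of an eighth-note step. All of the work for one pulse, including any burst of messages, has to finish well within roughly 10 ms.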

Using Go for a realtime audio app

I chose Go for three reasons: I already know the language, I wanted to use its concurrency features (goroutines), and portmidi libraries for it were already developed.

A brief overview of my implementation is as follows.

I split the program into several packages (a typical Go pattern): a metronome package, a midi package, a music package, and a sequencer package. Each package encapsulates one domain of the sequencer. This turned out to be quite useful, especially when I decided to test other MIDI drivers: all I had to do was change an import statement.

The core of my sequencer is the metronome package. It defines a universal clock (using time.NewTicker) that executes a callback function on each tick. The tick rate is set to the standard MIDI pulse rate of 24 pulses per quarter note. On each tick, the sequencer's callback decides whether any notes should be emitted. If so, it sends them to another callback, which is executed by the main program’s MIDI driver.

I wrote the MIDI driver so that each instrument has its own dedicated goroutine, which works well as long as you don’t have too many instruments. When the driver receives a note for an instrument, it looks up the right Go channel and sends the note over it. This is also easy to do in Go!

Overall, I found the program easy to write, and it had low latency: the internal timer and note emission stayed within about 2 milliseconds. I ran it with GODEBUG=gctrace=1 and found no garbage-collection stop-the-world pauses that could introduce extra latency (a common criticism of using garbage-collected languages for realtime applications).

Chasing down jitter

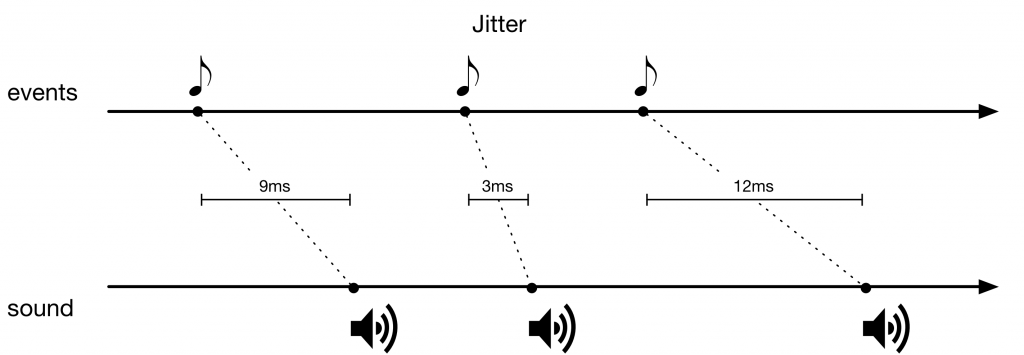

The biggest challenge with MIDI sequencing is jitter: the variation between when the notes of a sequence actually sound and when they were intended to sound. For example, suppose the MIDI sequencer intends to play three notes at 60 bpm, at 0.0, 1.0, and 2.0 seconds. Jitter might cause them to actually play at 0.02, 0.98, and 2.05 seconds.

Jitter greater than about 30 milliseconds can be devastating, because the human ear can detect it. I wanted to measure how much jitter my sequencer introduced.

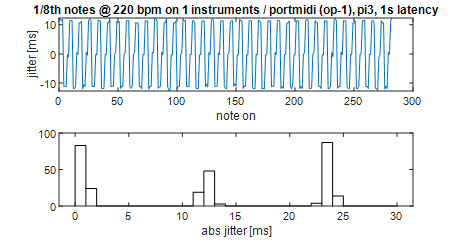

I set the sequencer to output a steady stream of notes (1/8th notes at 220 bpm) and recorded two separate sections of about 10-20 seconds each. I then aligned the two sections and calculated the difference, in milliseconds, between the onsets (the "rise") of corresponding notes in the two recordings.
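Here is a sketch of the comparison step. It assumes note onsets have already been extracted from the two recordings; onset detection is not shown. The post doesn't say how the sections were aligned, so aligning each recording on its own first onset is an assumption. `OnsetDifferences` is a hypothetical helper, and the onset times in `main` are made-up values for illustration, not measurements.

```go
package main

import "fmt"

// OnsetDifferences compares note onsets (in ms) from two recordings of the
// same steady sequence. Each recording is aligned on its own first onset,
// then corresponding onsets are subtracted. Extra onsets are ignored.
func OnsetDifferences(a, b []float64) []float64 {
	n := len(a)
	if len(b) < n {
		n = len(b)
	}
	if n == 0 {
		return nil
	}
	diffs := make([]float64, n)
	for i := 0; i < n; i++ {
		diffs[i] = (b[i] - b[0]) - (a[i] - a[0])
	}
	return diffs
}

func main() {
	// Made-up onsets roughly 136 ms apart (1/8th notes at 220 bpm);
	// the third note of the second recording arrives 5 ms late.
	first := []float64{100, 236, 373, 509}
	second := []float64{3000, 3136, 3278, 3409}
	fmt.Printf("%.0f\n", OnsetDifferences(first, second)) // [0 0 5 0]
}
```

Aligning on the first onset is simple but fragile: if that first note is itself jittered, every difference shifts by the same amount. Subtracting the mean offset between the two recordings is a more robust alternative.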

If there were no jitter, every difference would be zero, but as you can see, sometimes there is a difference.

By using a buffer in the MIDI calls (a feature built into portmidi), I was able to reduce the jitter to about 5 milliseconds, with rare spikes of 20 milliseconds. Not bad. This measurement also showed me that a cheap synthesizer can be a major source of jitter (one cheap synth I own adds about 20 milliseconds of jitter). Jitter also typically doesn’t increase with the number of instruments.

Conclusion

Thanks to the C bindings, I was happy to learn that Go works well as a language for realtime audio, at least when it comes to MIDI. I’ve started using this program to make my own music. It’s free and open-source if you want to try it yourself.